Soil Fertility, Fertilizers and Crop Nutrition: Past, Present, and Future

Part One: A Look Back

Society has made significant demands on agriculture and will make even greater ones in the not-too-distant future. Meeting future sustainability goals and environmental regulations while continuing to supply food, feed, fuel, and fiber requires a firm understanding of how “we” collectively arrived at our current fertility principles and beliefs, as well as the processes that address them. We have advanced far from the earliest rudimentary ideas about plant nutrition to a sophisticated science of prescription crop nutrition. This article is the first in a series that describes crop nutrition and fertilizers, from where we have been to where the authors believe we will need to be prepared to go if we are to meet world demands into the foreseeable future. Earn 1.5 CEUs in Nutrient Management by reading this article and taking the quiz.

Recent studies estimate more than half of the world food supply produced each year is attributable to use of mineral fertilizers. Not only are fertilizers critical to crop productivity, but the role of proper plant nutrition in improving crop quality, pest resistance, stress tolerance, and soil, human, and animal health is well documented. It is easy to overlook the importance of the nutrient status of the top 6 inches of soil for sustaining civilization. It’s been said that “if agriculture goes wrong, nothing else will have a chance to go right.” It is clear from history that without a stable food supply, it is not possible to have stable cities, schools, or economies. Food insecurity too often is expressed as societal unrest and human misery.

The recorded history of cultivated agriculture begins in the river basins of Mesopotamia (modern Iraq) and the Nile Valley of Egypt. During Greco-Roman times, crop production reached a relatively high degree of sophistication and productivity. Farmers understood the value of animal and human composts, manure, crop residues, crop rotation, limestone, ash, green manures, and coastal seaweed. Early explanations of plant nutrition included notions such as plants having souls and absorbing earth through their roots. Despite these early empirical observations, the principles of plant nutrition and soil fertility remained a mystery for many centuries.

A broader understanding of mineral nutrition developed in the 19th century. Early work by Saussure (1804) helped establish that plants require mineral nutrients, which they take up through their roots. Carl Sprengel compiled a list of 20 elements that he considered to be plant nutrients and proposed the “Law of the Minimum” (1828), which Justus von Liebig later popularized between 1840 and 1855. These new concepts in plant nutrition were hotly debated, but their practical relevance spread quickly among farmers around the world because the results were easily observed as greater crop yields.
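The principle behind the Law of the Minimum can be sketched in a few lines of Python. This is a deliberately simplified model, not Sprengel's or Liebig's own formulation: it assumes each nutrient's supply has already been expressed as a hypothetical "sufficiency" value (supply as a fraction of crop need, capped at 1.0) and that relative yield is set entirely by the scarcest nutrient. Real crop responses are curvilinear and nutrients interact, so treat this only as an illustration of the idea.

```python
# Simplified illustration of the "Law of the Minimum": relative yield is capped by
# the most-limiting nutrient. Sufficiency values (supply as a fraction of crop need)
# and nutrient names are hypothetical; real crop responses are far more complex.


def relative_yield(sufficiency: dict[str, float]) -> float:
    """Return relative yield (0 to 1) set by the scarcest nutrient."""
    if not sufficiency:
        raise ValueError("at least one nutrient is required")
    # Clamp each value to [0, 1]: a surplus cannot raise yield above the maximum,
    # and it cannot compensate for a shortage of another nutrient.
    return min(max(0.0, min(v, 1.0)) for v in sufficiency.values())


def limiting_nutrient(sufficiency: dict[str, float]) -> str:
    """Return the name of the nutrient with the lowest sufficiency."""
    return min(sufficiency, key=sufficiency.get)


if __name__ == "__main__":
    field = {"N": 0.9, "P": 0.6, "K": 1.2}  # hypothetical field
    print(relative_yield(field), limiting_nutrient(field))
```

In this hypothetical field, adding more N or K leaves relative yield unchanged; only raising P sufficiency increases it, which is the kind of response farmers could readily observe.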

By the early 1800s, it was becoming understood that proper use of soil amendments benefited plant growth. As the benefits of plant nutrients and the value of crop response to nutrient additions became recognized, the search for nutrient sources exploded.

Phosphate (P)

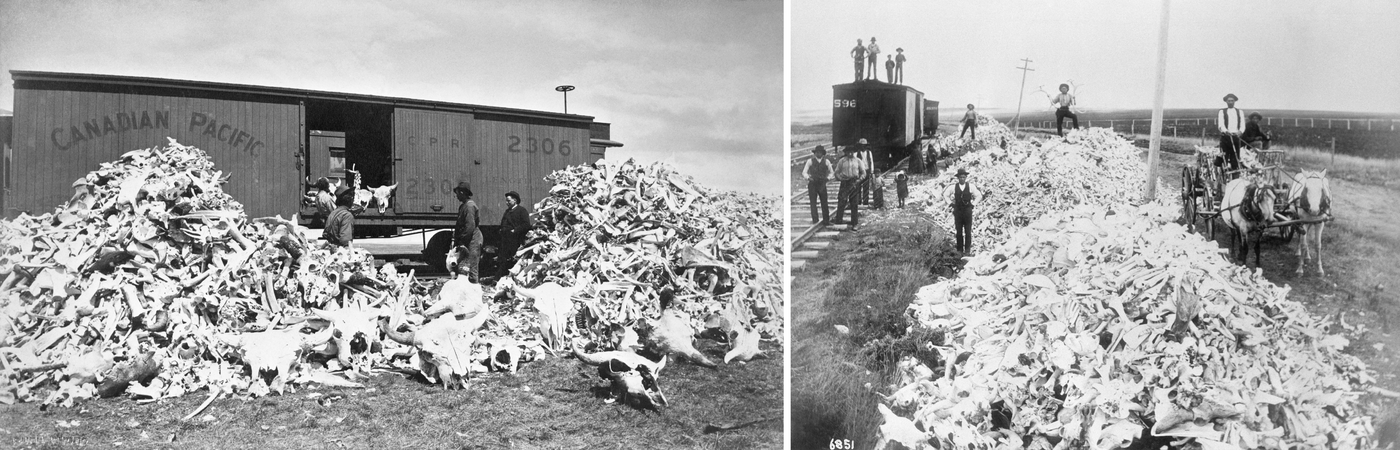

The agronomic value of ground bones as a nutrient source was recognized early, and demand grew quickly. Unprocessed bones [containing the P-rich mineral hydroxyapatite, Ca5(PO4)3OH] were crushed and applied to the soil at rates of 1 ton/ac or more (Figures 1 and 2). In England, demand for bones outstripped the domestic supply, and by 1815, bones were being imported from other European countries, with imports reaching a maximum of 30,000 tons/year (Nelson, 1990). This led the famous plant nutritionist Justus von Liebig to complain:

“England is robbing all other countries of the condition of their fertility. Already... she has turned up the battlefields of Leipzig, of Waterloo and of the Crimea; already from the catacombs of Sicily she has carried away the skeletons of many successive generations. Annually she removes from the shores of other countries to her own, the manurial equivalent of three millions and a half of men, whom she takes from us the means of supporting, and squanders down her sewers to the sea. Like a vampire, she hangs around the neck of Europe, nay, of the entire world, and sucks the heart blood from nations...”.

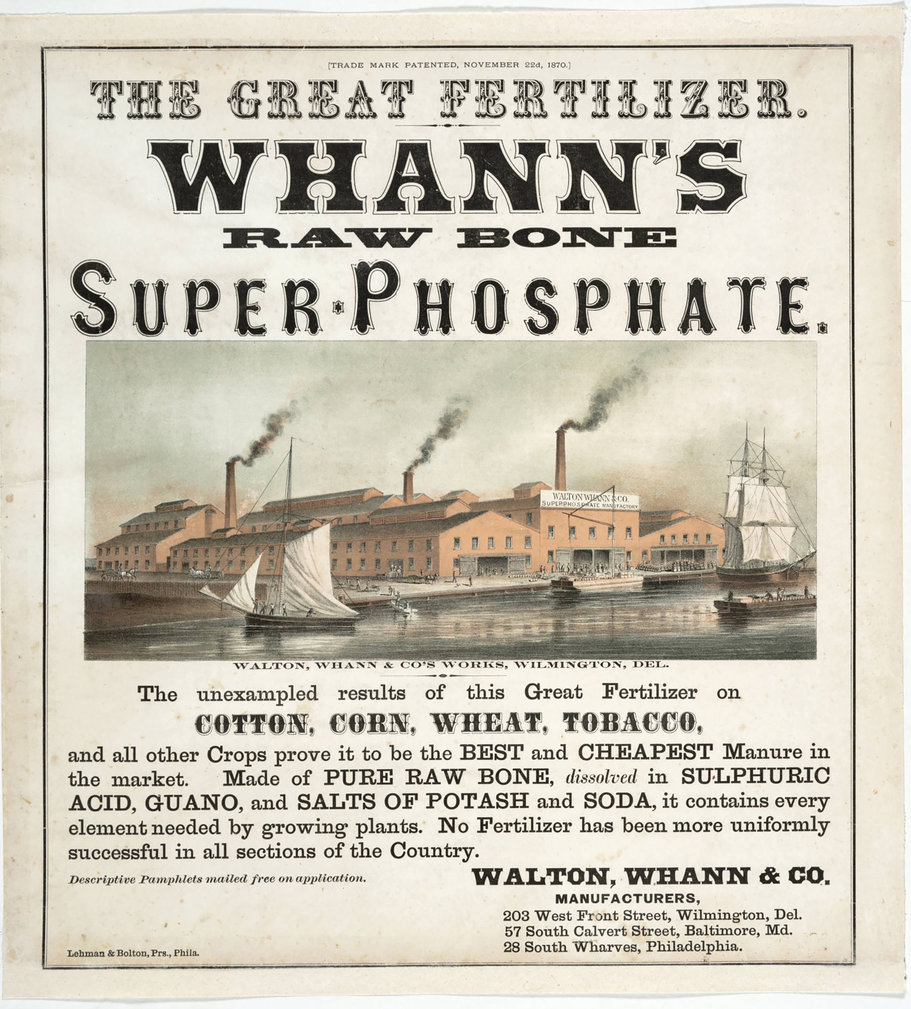

The observation that bones were not uniformly effective as a nutrient source led John Lawes to experiment with treating them with sulfuric acid. This acidification process converted insoluble hydroxyapatite into relatively soluble monocalcium phosphate [Ca(H2PO4)2], which proved very effective for stimulating crop growth. In 1842, he was granted a patent for “superphosphate of lime,” composed of monocalcium phosphate and calcium sulfate (16–20% P2O5). Superphosphate manufacturing from bones quickly spread around the world and marked the beginning of the modern fertilizer industry.
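A simplified, balanced form of the acidulation reaction shows where the two components of superphosphate come from. It assumes pure hydroxyapatite and ignores water of hydration and the impurities present in real bones, so it is an idealization rather than a description of Lawes's actual process:

$$
2\,\mathrm{Ca_5(PO_4)_3OH} + 7\,\mathrm{H_2SO_4} \longrightarrow 3\,\mathrm{Ca(H_2PO_4)_2} + 7\,\mathrm{CaSO_4} + 2\,\mathrm{H_2O}
$$

Because the calcium sulfate remains in the product alongside the soluble monocalcium phosphate, it dilutes the phosphate, which helps explain why superphosphate is a relatively low-analysis material.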

As animal bones’ value as a crop P source was recognized, meat-packing companies began marketing untreated bone meal as a fertilizer. Meat-packing companies later became leading producers of bone-derived superphosphate fertilizer. Other P sources (such as manure, guano, ground phosphate rock, and basic slag) remained available in limited quantities during this time. Bone meal is still used today and promoted as an organic P source.

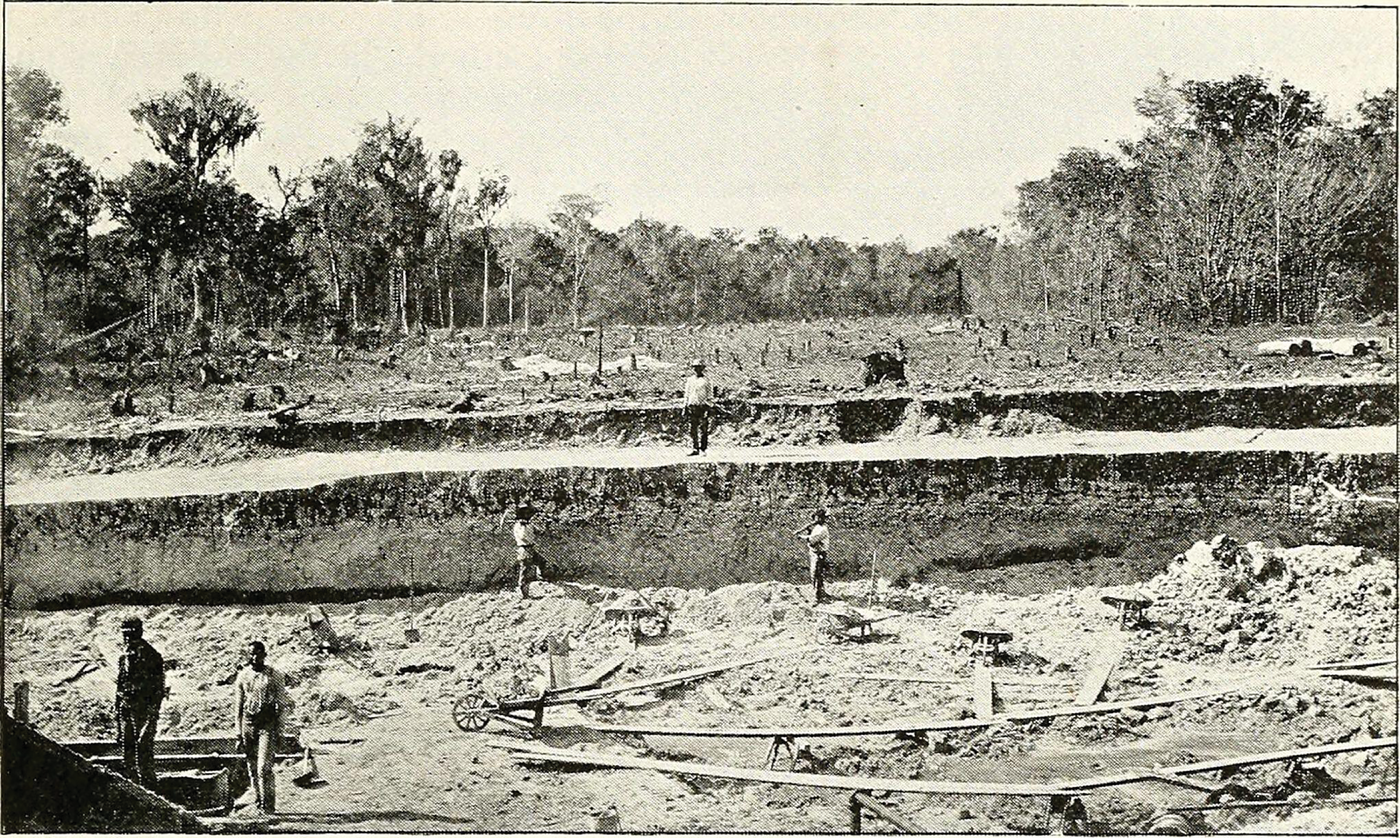

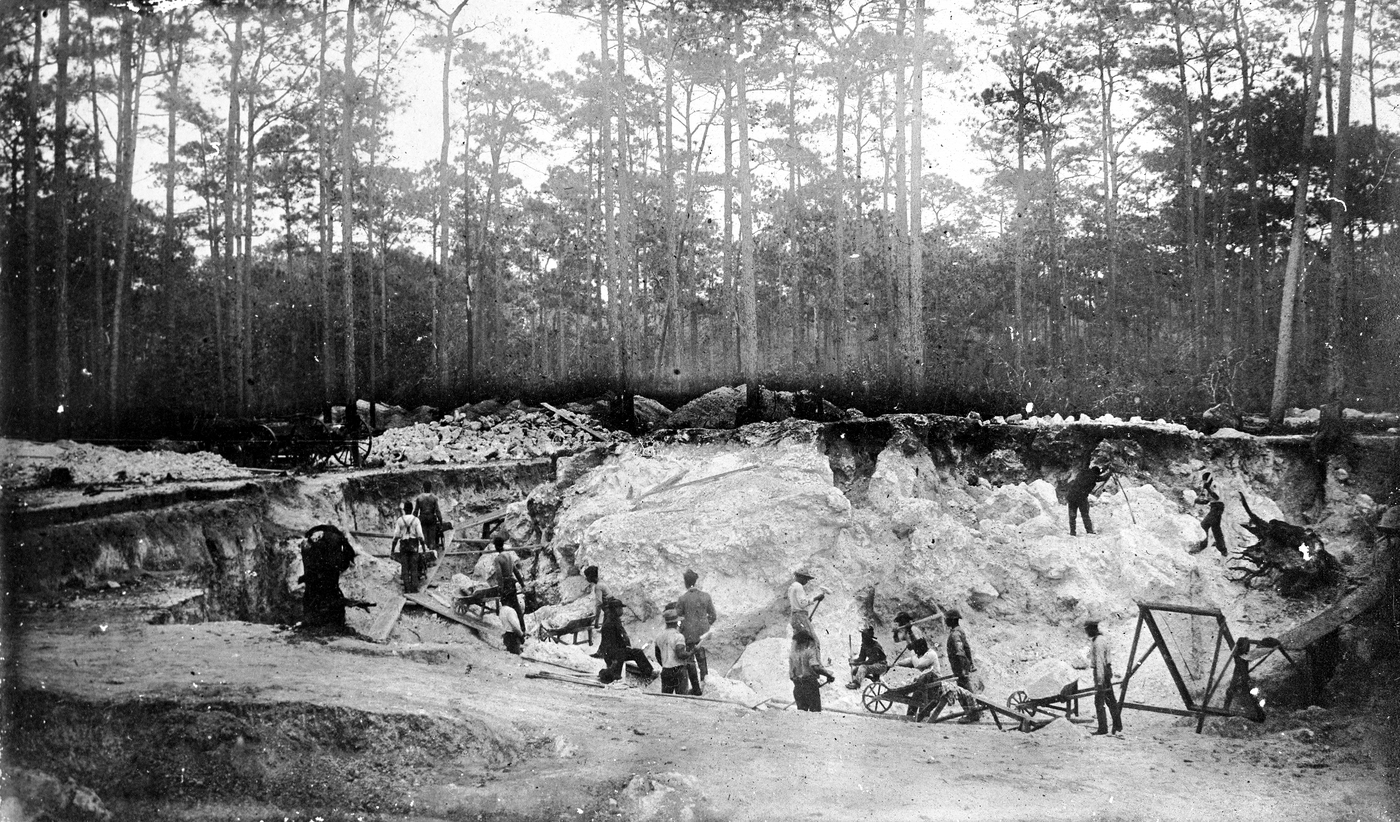

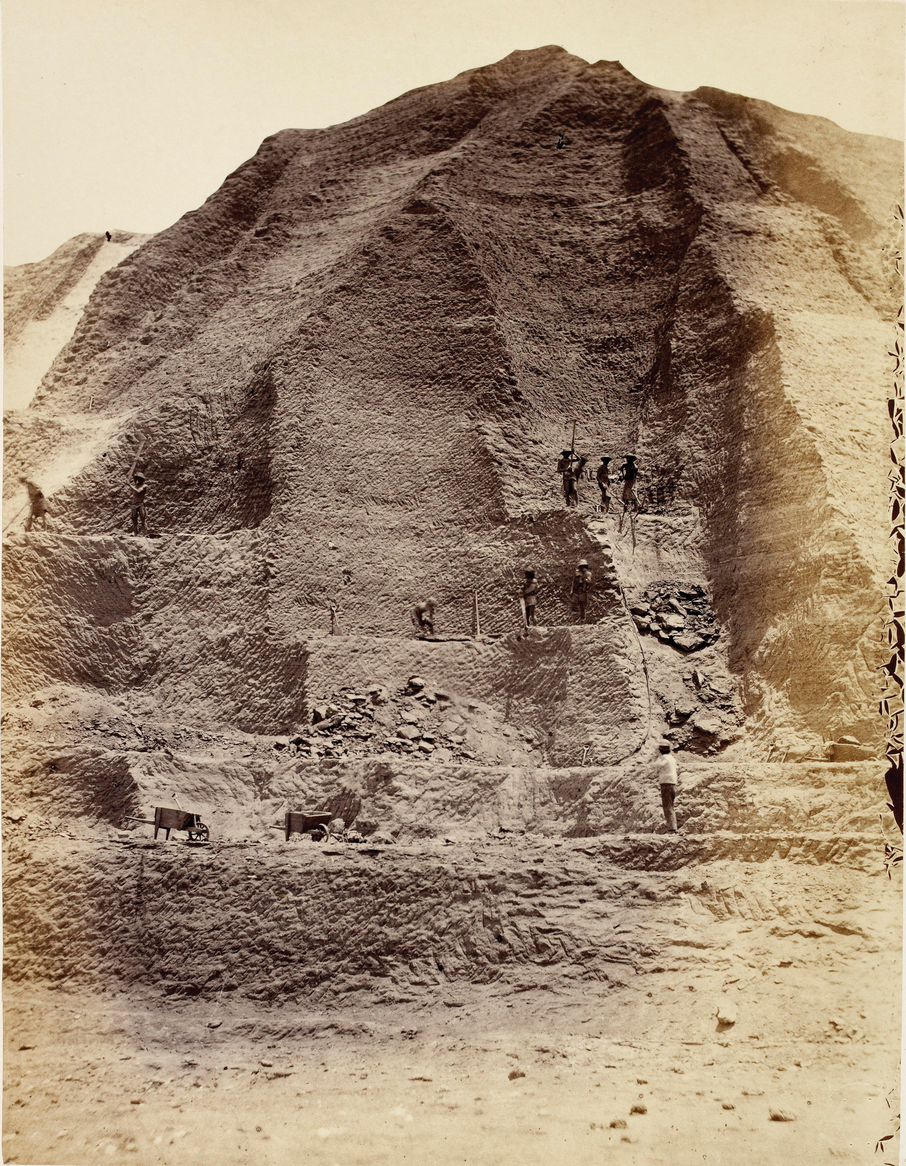

Eventually, mineral deposits of phosphate rock (apatite) were discovered, developed, and substituted for bones in superphosphate production. The P fertilizer industry entered the industrial era as geologic deposits of phosphate rock became readily available and accessible around the world (e.g., England, 1847; Norway, 1851; France, 1856; USA, 1867; Tunisia, 1897; Morocco, 1921; and Russia, 1930). All common P fertilizers are now produced from sedimentary or igneous phosphate rock deposits. The commercial development of rock phosphate deposits in South Carolina shortly after the Civil War added a much-needed new nutrient source to the global fertilizer market. These deposits are relatively shallow, and the rock was dug by hand with shovels. The discovery of rock P deposits in Florida in the 1880s added more capacity to the fertilizer market, and this vast Florida resource continues to be mined and remains important today (Figures 3 and 4). Additional rock P was mined in the vicinity of Nashville, TN, at the end of the 19th century. Rock P resources in the western U.S. states of Utah, Wyoming, and Idaho were discovered around the same time and are still in use today. Additional P resources were developed in North Carolina in the 1960s.

Most sources of phosphate rock are too insoluble for direct use as a P source for plants. A few unique phosphate rock deposits are suitable for direct application to soil without processing with acid. These P sources are best suited for use with perennial crops growing in low-pH soils where the existing acidity and low soil Ca concentrations help speed rock dissolution and P release.

Although superphosphate was the dominant P fertilizer in the world for more than 100 years, it is no longer widely used (with the notable exception of pastures in Australia and New Zealand). Modern P fertilizers such as triple superphosphate (40–46% P2O5) and ammoniated phosphates (MAP 50–52% P2O5; DAP 46–53% P2O5; APP 34–37% P2O5) were developed to provide readily soluble phosphate in a high-analysis fertilizer that can be transported efficiently.
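The transport advantage of high-analysis products comes down to simple arithmetic. The sketch below assumes an arbitrary target of 100 lb P2O5, uses 18% P2O5 (an assumed midpoint of the 16–20% range given for superphosphate) and 46% P2O5 for triple superphosphate, and also converts P2O5 to elemental P from atomic masses. The function names are hypothetical.

```python
# Hypothetical comparison: product tonnage needed to deliver the same P2O5.
# Grades are taken from the text (18% is an assumed midpoint of 16-20% for
# superphosphate; 46% for triple superphosphate). Atomic masses in g/mol.

P_MASS = 30.974
O_MASS = 15.999
P2O5_MASS = 2 * P_MASS + 5 * O_MASS
P_FRACTION_OF_P2O5 = 2 * P_MASS / P2O5_MASS  # about 0.436


def product_needed(p2o5_lb: float, grade_pct: float) -> float:
    """Pounds of fertilizer product that contain p2o5_lb of P2O5 at the given grade."""
    if grade_pct <= 0:
        raise ValueError("grade must be a positive percentage")
    return p2o5_lb / (grade_pct / 100.0)


def p2o5_to_p(p2o5_lb: float) -> float:
    """Convert an amount expressed as P2O5 to elemental P."""
    return p2o5_lb * P_FRACTION_OF_P2O5


if __name__ == "__main__":
    target = 100.0  # lb P2O5; arbitrary example rate
    for name, grade in [("superphosphate", 18.0), ("triple superphosphate", 46.0)]:
        print(f"{name}: {product_needed(target, grade):.0f} lb of product")
    print(f"{target:.0f} lb P2O5 = {p2o5_to_p(target):.1f} lb P")
```

Under these assumptions, roughly 556 lb of superphosphate but only about 217 lb of triple superphosphate supply the same 100 lb of P2O5, so more than 2.5 times as much material must be hauled and spread. The P2O5-to-P factor (about 0.436) is handy because fertilizer grades are stated as P2O5, while plant and soil data are often reported as elemental P.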

Potash (K)

Early potash supplies came from leaching and collecting potassium carbonate (K2CO3) salts from wood ash after burning vast hardwood forests. Potash was in high demand for glass making and soap production. Harvesting kelp was another early source of potash. Some kelp was used directly as fertilizer, but most of the harvested kelp was burned to collect concentrated potash for industrial purposes.

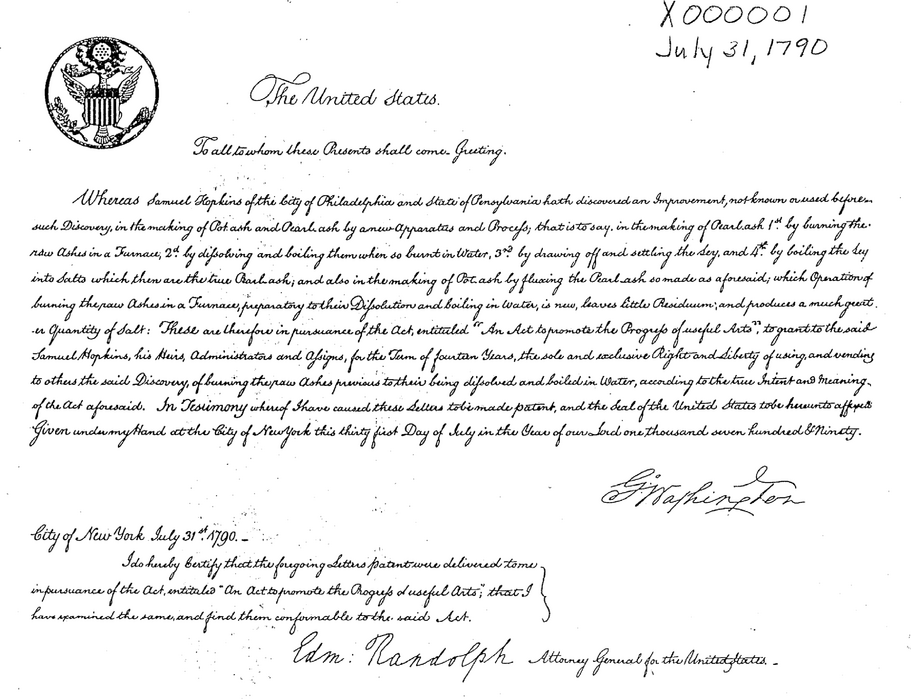

Potash production was an important income source for early North American colonists as forests were cleared and access to ports made shipping to Europe feasible. Income from selling potash made from the burned forests often provided the financial support pioneer families needed during the first years while a new farm was established. Asheries (potash works) were common businesses in U.S. frontier settlements. The first U.S. patent, issued in 1790, was granted to Samuel Hopkins for an improved process of making potash (Figure 5).

As the essentiality of K for plant nutrition was recognized in the 19th century, demand for K fertilizer greatly expanded. Large-scale K mining, made possible by technology from the industrial revolution, made potash more affordable and available for farmers. The early supply of mined potash came from the Stassfurt region of Germany (1860s), which still has an active K-mining industry.

The potash trade between North America and the German Kalisyndicate potash cartel was halted by World War I. This abrupt potash shortage prompted urgent development of new K sources in the U.S. in the early 1900s. Potash was extracted from brines in the Western Sandhills of Nebraska. At the peak, there were 10 plants operating in the region with a dedicated railroad line for transportation. In California, kelp harvesting was an important source of K during the early 1900s. Kelp was also a source of acetone, which was important for the war effort. Potassium and boron-rich brines from the Searles Lake region of California were extracted for commercial fertilizers and industrial chemicals. In New Mexico, commercially valuable deposits were developed near Carlsbad where potash mining continues today. Other deposits were developed in Michigan and Utah. Utah remains a producer of potash from the Great Salt Lake and from underground deposits in southeastern Utah. The Michigan deposit is under development again.

Following World War II, the world's largest potash deposits were discovered at depths of 1,000 m or more in Saskatchewan, Canada, with commercial production beginning by 1960. Saskatchewan continues to be a major supplier of North American potash and is the largest potash exporter in the world.

Nitrogen (N)

Guano, the fecal excrement of seabirds or bats and a source of N and other nutrients, began to be imported into the U.S. during the 1820s and became the leading source of fertilizer for the next half century (Figure 6). Its value was recognized in South America and Europe even earlier. Guano commercialization spurred settlement of remote bird-populated islands. The first imported guano fertilizers contained 12–14% N and 10–12% P2O5. The nutrient concentration of imported guano declined over the decades as the higher-quality resources were depleted and poorer sources began to be mined. Competition for this valuable organic resource led to wars between Peru and Chile, later extending to Bolivia and Ecuador. The U.S. also engaged its Navy to secure strategic guano islands vital to domestic crop production.

As geologic sources of P and K were being developed, a severe shortage of N fertilizer remained a limiting factor in achieving balanced plant nutrition. As guano deposits were depleted, geologic deposits of nitrate salts in the arid Atacama Desert of Chile were developed and became an important N source for munitions and agriculture. Organic N sources were also important, primarily “animal tankage” (dried and ground animal parts not suitable for human consumption), cottonseed meal, fish waste, and dried blood. Ammonium sulfate was increasingly used after the 1860s, since it was a by-product of the coal/coke and gas-light industries. Hydroelectric power was also used to produce fixed N in varying quantities, beginning at locations such as Niagara Falls, NY (1902), Norway (Norsk Hydro, 1904), and Muscle Shoals, AL (1917), among others (Ernst, 1928).

Farmers were aware of the large yield and quality benefits of adding N to crops, but N supplies were limited and expensive. The search for additional N resources led to one of the most important discoveries of the 20th century, when German scientists Fritz Haber and Carl Bosch collaborated in developing and commercializing the modern ammonia synthesis process known as Haber-Bosch ammonia synthesis (1913). The process reacts hydrogen gas (H2) with atmospheric nitrogen gas (N2) over an iron catalyst at high temperature and pressure to produce ammonia (NH3). It became an efficient, economical way to make the large quantities of nitrogen fertilizer needed to feed an expanding global population, and it is still the predominant production process today.

There was limited ammonia production in the U.S. before the 1950s, and much of it was used for industrial purposes and munitions. As wartime ammonia plants were converted to peaceful purposes after World War II, many petroleum companies became the major U.S. producers of ammonia. Methane became the major feedstock for producing hydrogen gas through a “cracking” process called steam reforming (CH4 + H2O → CO + 3H2), and the hydrogen was then combined with nitrogen to form ammonia (3H2 + N2 → 2NH3). The energy companies found it profitable to upgrade methane into more valuable ammonia fertilizer.
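The steam-reforming and synthesis reactions in the paragraph above imply a simple feedstock budget, which the sketch below works out. It assumes complete conversion, no water-gas shift step (which real plants use to recover extra hydrogen from CO), and no methane burned for process heat, so the results are idealized stoichiometric figures, not plant data. The function names are hypothetical.

```python
# Idealized feedstock arithmetic for the reactions described in the text:
#   steam reforming:   CH4 + H2O -> CO + 3 H2
#   ammonia synthesis: 3 H2 + N2 -> 2 NH3
# Assumptions (not plant data): complete conversion, no water-gas shift step,
# and no methane burned as fuel. Molar masses in g/mol.

MOLAR_MASS = {"CH4": 16.043, "N2": 28.014, "NH3": 17.031}
GRAMS_PER_TONNE = 1_000_000


def ideal_inputs_per_tonne_nh3() -> dict[str, float]:
    """Tonnes of CH4 and N2 consumed per tonne of NH3 under the assumptions above."""
    mol_nh3 = GRAMS_PER_TONNE / MOLAR_MASS["NH3"]
    mol_h2 = mol_nh3 * 3 / 2  # 3 H2 are needed for every 2 NH3
    mol_ch4 = mol_h2 / 3      # each CH4 yields 3 H2 by reforming alone
    mol_n2 = mol_nh3 / 2      # 1 N2 for every 2 NH3
    return {
        "CH4": mol_ch4 * MOLAR_MASS["CH4"] / GRAMS_PER_TONNE,
        "N2": mol_n2 * MOLAR_MASS["N2"] / GRAMS_PER_TONNE,
    }


def nitrogen_fraction_of_nh3() -> float:
    """Mass fraction of N in ammonia (one N atom is half of an N2 molecule)."""
    return (MOLAR_MASS["N2"] / 2) / MOLAR_MASS["NH3"]


if __name__ == "__main__":
    inputs = ideal_inputs_per_tonne_nh3()
    print(f"CH4: {inputs['CH4']:.3f} t, N2: {inputs['N2']:.3f} t per t NH3")
    print(f"N content of NH3: {nitrogen_fraction_of_nh3():.1%}")
```

Under these assumptions, about 0.47 t of methane feedstock and 0.82 t of N2 yield 1 t of ammonia, and N makes up about 82% of ammonia by weight, which is why anhydrous ammonia is the highest-analysis common N fertilizer.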

Direct application of anhydrous ammonia was not practical on farms at this time because of difficulties in transportation, handling, and field application. Since much of the ammonia was already being converted to ammonium nitrate for munitions, large amounts of ammonium nitrate fertilizer became available for crop nutrition. Gradually, urea began to displace ammonium nitrate as the predominant nitrogen fertilizer, and by 1975 urea had become the leading N fertilizer in the world. Direct application of anhydrous ammonia, now common in parts of North America, has remained largely a North American practice.

A surge in ammonia production occurred through the 1950s and 1960s, driven especially by increasing demand for nitrogen fertilizer for corn production in the Midwest and cotton production in the Southeast. Consumption of N fertilizers grew from 2.7 million tons in 1960 to 7 million tons by 1969, and by then 67 companies were producing ammonia in 110 facilities. As U.S. production capacity rapidly expanded, ammonia prices dropped from $90/ton to $20/ton, making overproduction and poor economic returns a major problem for the new fertilizer industry.

Sulfur (S)

Solubilizing phosphate from insoluble apatite minerals requires acidification with sulfuric acid. As the early P fertilizer industry grew, it depended on sulfur imports to process phosphate rock. The later discovery of sulfur deposits along the U.S. Gulf Coast, followed by technology for recovering elemental S from sources such as pyrite and petroleum, relieved the sulfur shortages. Sulfur was also supplied in common fertilizers such as potassium sulfate, calcium sulfate, ammonium sulfate, single superphosphate, and langbeinite (a potash mineral).

Micronutrients

Awareness of the importance of micronutrients under specific soil conditions grew from 1925 to 1950. Much of the initial micronutrient research took place in regions of high-value crop production, such as Florida and California. Plant stunting and chlorosis had been observed for many years before their causes were identified and appropriate nutrient sources were developed. During this period, there were many breakthrough discoveries regarding the essential roles of plant micronutrients. Most micronutrient fertilizers are derived from nutrients recovered from by-products of other industries.

As micronutrient needs were identified, processes for obtaining fertilizers from various industrial by-products were developed.

In 1933, the Tennessee Valley Authority began the National Fertilizer Development Center with the mandate to increase the efficiency of national fertilizer manufacture and use. It operated until the 1990s, and a spin-off organization still operates in Muscle Shoals, AL as the International Fertilizer Development Center.

Previously, most fertilizers had been applied as mixtures of individual nutrient sources. In the 1950s, TVA engineers developed technology to make homogeneous granular fertilizers that contain multiple nutrients within a single particle. By the early 1960s, 250 granular NPK plants were operating to meet market demand for materials such as 8-24-24, 15-15-15, or 10-20-30. Eventually, homogeneous granular fertilizers were largely replaced by custom, prescription blends of individual fertilizer materials such as urea, ammoniated phosphate, and potash, formulated to meet specific soil and crop needs. As soil testing and prescriptive fertilizer application became common, the use of homogeneous granular fertilizers declined.
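Grades such as 8-24-24 state the percentages by weight of N, P2O5, and K2O. Prescription blending can be sketched as a small calculation: given a target rate, find how much of each material to combine. The grades below (urea 46-0-0, diammonium phosphate 18-46-0, muriate of potash 0-0-60) are common nominal values assumed for illustration, the target rate is hypothetical, and the function is not taken from any particular blending software.

```python
# Hypothetical bulk-blend calculation: lb/acre of each material to meet a target
# N-P2O5-K2O rate. Grades are assumed nominal values, not from the text:
# urea 46-0-0, diammonium phosphate (DAP) 18-46-0, muriate of potash 0-0-60.
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    name: str
    n: float     # % N by weight
    p2o5: float  # % P2O5 by weight
    k2o: float   # % K2O by weight


UREA = Material("urea", 46.0, 0.0, 0.0)
DAP = Material("DAP", 18.0, 46.0, 0.0)
POTASH = Material("potash", 0.0, 0.0, 60.0)


def blend(n_lb: float, p2o5_lb: float, k2o_lb: float) -> dict[str, float]:
    """Return lb/acre of urea, DAP, and potash for the target nutrient rates."""
    # DAP is the only P source here, so size it to the P2O5 target first.
    dap = p2o5_lb / (DAP.p2o5 / 100)
    # Credit the N that DAP supplies before filling the rest with urea.
    n_from_dap = dap * DAP.n / 100
    if n_from_dap > n_lb:
        raise ValueError("DAP alone exceeds the N target; another P source is needed")
    urea = (n_lb - n_from_dap) / (UREA.n / 100)
    potash = k2o_lb / (POTASH.k2o / 100)
    return {UREA.name: urea, DAP.name: dap, POTASH.name: potash}


if __name__ == "__main__":
    for material, lb in blend(150, 60, 90).items():  # hypothetical 150-60-90 target
        print(f"{material}: {lb:.0f} lb/acre")
```

For the hypothetical 150-60-90 lb/ac target, this gives about 130 lb DAP, 275 lb urea, and 150 lb potash per acre. DAP is sized first because it is the only P source in this set, and its N contribution is credited before urea fills the remaining N requirement.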

Plant Nutrition Science

The scientific foundation for improved plant nutrition emerged slowly in the U.S. with the establishment of state agricultural colleges funded by the Morrill Act of 1862. However, agricultural science was largely overshadowed by the academic engineering programs in the early years since agriculture was not considered a highly desirable occupation. The 1887 Hatch Act provided each agricultural college with $15,000/year to develop an agricultural experiment station. The passage of the 1914 Smith-Lever Act established an extension department at each land grant college. With these pieces of legislation in place, the stage was set for rapid progress in improving the crop nutrition status of U.S. cropland. The USDA Bureau of Soils was also given the mandate to investigate the effect of soil properties on crop production, search for new sources of domestic nutrients, and begin the national soil survey project.

Following World War II, soil testing gradually became more widespread. Land grant institutions widely promoted this practice to improve nutrient efficiency and boost crop yields using newly available and affordable fertilizer materials. Each state pursued rapid advances in laboratory analysis, calibration, and fertilizer recommendations as farmers were encouraged to test their soil. For example, the State of Kansas set up a network of 60 individual county soil-testing laboratories operating across the state in 1960. Since then, the number of state-supported soil-testing laboratories has decreased while the private soil-testing industry has expanded. Both the public and the private testing services provide valuable and unique functions for farmers.

The development of the modern North American soil-testing industry is one of the great accomplishments in agriculture, with many millions of agricultural soil samples tested in laboratories annually. Hurdles remain in improving the accuracy of assessments and predictions of crop nutrient requirements, but the successful North American soil-testing model has been replicated around the world.

The emergence of the modern fertilizer industry has been called one of the greatest contributions to human welfare in the 20th century. During this time, we have seen tremendous increases in the yield and quality of all our major food crops and the alleviation of widespread hunger and famine. Nutrient use efficiency has also improved because of a combination of factors, such as improved fertilizer materials, precision agriculture, analytical tools, farm equipment, genetics, and pest control. However, major challenges remain: nutrient losses to the environment are still unacceptably large, and a renewed effort is needed for greater resource conservation.

The next article in this series will examine the current state of the global fertilizer industry and how it supports modern food production. A third article in the series will explore the future of the fertilizer industry and suggest steps needed to sustain proper crop nutrition far into the future.

Further Reading

Ciceri, D., Manning, D.A.C., & Allanore, A. (2015). Historical and technical developments of potassium resources. Science of the Total Environment, 502, 590–601.

Dixon, M.W. (2018). Chemical fertilizer in transformations in world agriculture and the state system, 1870 to interwar period. Journal of Agrarian Change, 18, 768–786.

Emsley, J. (2002). The 13th element: the sordid tale of murder, fire, and phosphorus. Trade Paper Press.

Ernst, F.A. (1928). Fixation of atmospheric nitrogen. Industrial Chemical Monograph. Chapman & Hall.

Hager, T. (2008). The alchemy of air: A Jewish genius, a doomed tycoon, and the scientific discovery that fed the world but fueled the rise of Hitler. Crown Publishing Group.

Nelson, L.B. (1990). History of the U.S. fertilizer industry. Tennessee Valley Authority.

Russel, D.A., & Williams, G.G. (1977). History of chemical fertilizer development. Soil Science Society of America Journal, 41, 260–265.

Self-Study CEU Quiz

Earn 1.5 CEUs in Nutrient Management by taking the quiz for this article at https://web.sciencesocieties.org/Learning-Center/Courses. For your convenience, the quiz is printed below. The CEU can be purchased individually or accessed as part of your Online Classroom Subscription.

- It became widely known that certain soil additives could benefit plant growth
  - in the river basins of Mesopotamia (Iraq) and Egypt.
  - by the early 1800s.
  - by the early 1900s.
  - after WWII.

- Bird and bat feces were a significant source of nitrogen for crop production
  - because these animals can absorb N2 gas through their wings and excrete it in their feces.
  - through much of the 1800s.
  - until the discovery that they contained levels of heavy metals that forced limitations on their use.
  - All of the above.
  - None of the above.

- Soon after urea was developed, it became the leading nitrogen fertilizer in the world because
  - anhydrous ammonia was difficult to transport and handle.
  - urea provided a safe, dry, high-analysis source of nitrogen that could be handled by most farmers and fertilizer suppliers.
  - anhydrous ammonia use is primarily a North American practice with limited utility in other areas of the world.
  - All of the above.
  - None of the above.

- Petroleum companies were the major producers of ammonia after WWII because
  - they possessed the financial wherewithal to install ammonia synthesis equipment.
  - they possessed the storage vessels required to store ammonia.
  - it was more profitable to upgrade their methane supplies to ammonia for fertilizer production.
  - All of the above.
  - None of the above.

- Which U.S. government legislation is largely responsible for the establishment of many land grant state colleges that set the stage for establishing crop nutrition as a “science”?
  - The Morrill Act of 1862.
  - The Hatch Act of 1887.
  - The Haber-Bosch Act of 1913.
  - The Smith-Lever Act of 1914.
  - The Agricultural Act of 2014.

- The widespread promotion and development of soil-testing labs and nutrient extraction processes, respectively, were largely attributable to Land Grant University Institution Initiative.
  - True.
  - False.

- The North American model for developing a soil test analysis industry to guide producer fertilization strategies has been replicated around the world.
  - True.
  - False.

- Private soil-testing labs now provide a significant portion of the soil test analyses conducted across the U.S.
  - True.
  - False.

- The mineral commonly mined as rock phosphate is called
  - sylvanite.
  - gypsum.
  - apatite.
  - guano.

- Direct application of rock phosphate as a fertilizer without processing is best suited for
  - container plants.
  - calcareous, fine-textured soils.
  - low organic matter soils.
  - acid, sandy soils.

- The earliest potassium fertilizer materials came from
  - shallow surface mines in the southeast U.S.
  - underground mines in Saskatchewan.
  - wood ashes.
  - Dead Sea salt brines.

- Besides nitrogen gas, the second key ingredient in ammonia synthesis is
  - sulfuric acid.
  - hydrogen gas.
  - oxygen gas.
  - hydrochloric acid.

- Sulfuric acid is used as an ingredient in manufacturing which of these fertilizers?
  - Anhydrous ammonia.
  - Muriate of potash.
  - Monoammonium phosphate.
  - None of the above.

- Most nutrient sources for micronutrient fertilizers are derived from
  - Great Salt Lake brines.
  - by-products from other industries.
  - underground hard-rock mining.
  - sea kelp.

- The International Fertilizer Development Center (IFDC) originated with
  - the Hatch Act.
  - the German Kalisyndicate.
  - the U.S. Department of Agriculture (USDA).
  - the Tennessee Valley Authority (TVA).

Text © . The authors. CC BY-NC-ND 4.0. Except where otherwise noted, images are subject to copyright. Any reuse without express permission from the copyright owner is prohibited.